Ever watched a video on a crowded subway or, better yet, during that really boring meeting, and turned on captions because you could not just listen to it? You used an accessibility feature! But what are captions, anyway?

The Web Accessibility Initiative (WAI) describes them as:

“synchronized visual and/or text alternative for both speech and non-speech audio information needed to understand the media content” (“Understanding Success Criterion 1.2.2: Captions (Prerecorded)”)

Captions take the information provided by the audio track of a piece of media and provide a text-based alternative, so that deaf and hard-of-hearing users can also access information initially provided via sound. Other people benefit from captions too, such as:

- Children and adults learning how to read. Captions have been shown to help improve literacy.

- People watching content in a language that is not their first, such as native English speakers watching a video in Spanish. Captions help with comprehension.

- Everyone. Captions have been proven to help increase comprehension and retention of the information being presented (Gernsbacher).

Whether the content is prerecorded or live, we often use tools to generate captions automatically. These tools have drawbacks, however.

A Story

For example, while presenting about accessibility at the Indiana Tech Engineering and Computer Science Conference (ITECS) in Fort Wayne, Indiana, I turned on auto captions during my presentation. (Both PowerPoint and Google Slides have a built-in auto captioning system that anyone can turn on while giving a presentation.) The experience exposed a major shortcoming, though.

Those who know me know that I use some profanity from time to time, and I was (since it was, in fact, a conference) censoring myself in that regard. The captions, however, had a different idea.

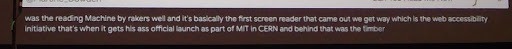

Let’s look at those captions on the screen a little more closely:

...was the reading Machine by rakers well and it’s basically the first screen reader that came out we get way which is the web accessibility initiative that’s when it gets his ass official launch as part of MIT in CERN and behind that was the timber...

To begin with, it reads a lot like gibberish. Knowing that it was a presentation about accessibility, you might deduce that I was talking about the history of accessibility or the web, but I think we can all agree that there are some major flaws here.

First, the tool just dumps words. There is not a single punctuation mark, break, or other indication of where sentences start or end, regardless of pauses in my speech. If you can hear and are following along, it might not be too bad, but with no sound, it is quite hard to follow.

Second, I think we can agree that I was more than likely making a lot more sense than that while presenting. What happened is that the tool skipped some words and mistranscribed others. “reading Machine by rakers well” was supposed to be “reading machine by Ray Kurzweil”. Names seem to be something it really struggles with, because it fails again when it writes “part of MIT in CERN and behind that was the timber…” where I was talking about Sir Tim Berners-Lee.

The icing on the cake, though, is when it decided that I was talking about a donkey… “web accessibility initiative that’s when it gets his ass official launch”... and an official donkey at that! Lol!

What is the point of this story? It is not to scare you off using auto captions. No, they are a great tool when other options are not available. BUT, and this is the important bit, they are not good enough on their own. They have huge shortcomings, as demonstrated above and on countless fail blogs, lists, and articles. So what should we do?

Solutions

Captions

During live events, where the captioning needs to be instant, such as the scenario presented above, the best solution is to have a human captioner transcribe the event live. It is important to understand that automatically generated captions are not currently good enough to be a well-implemented solution. They are a stopgap at best, and are not a replacement for manually transcribed captions.

If we are uploading videos, we can use the auto captioning tools provided either in our video editing tools or by the platform we are uploading to. This, however, is just a starting point. We must then go back through the captions, verify them, and fix them for completeness and accuracy.
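To make that review pass a little less daunting, here is a minimal sketch of a script that points a human reviewer at the cues most likely to need attention. It assumes the captions were exported in WebVTT format, and the function names (`parse_cues`, `flag_for_review`) and the 20-word threshold are made up for illustration. It does not fix anything by itself; it only suggests where to start reading.

```python
import re

# Matches a WebVTT cue timing line such as "00:00:01.000 --> 00:00:04.000".
TIMING = re.compile(r"^(\d{2}:)?\d{2}:\d{2}\.\d{3} --> (\d{2}:)?\d{2}:\d{2}\.\d{3}")


def parse_cues(vtt_text):
    """Return a list of (timing, text) pairs from WebVTT-formatted text."""
    cues = []
    for block in re.split(r"\n\s*\n", vtt_text.strip()):
        lines = block.splitlines()
        for i, line in enumerate(lines):
            if TIMING.match(line):
                # Everything after the timing line is the caption text.
                text = " ".join(part.strip() for part in lines[i + 1:])
                cues.append((line.strip(), text))
                break
    return cues


def flag_for_review(cues, max_words=20):
    """Return (timing, reason) pairs for cues a human should check first."""
    flagged = []
    for timing, text in cues:
        if not re.search(r"[.,!?;:]", text):
            # Auto captions often dump words with no punctuation at all.
            flagged.append((timing, "no punctuation"))
        elif len(text.split()) > max_words:
            # Hypothetical threshold: long cues are hard to read in time.
            flagged.append((timing, "very long cue"))
    return flagged
```

A cue that comes back unflagged is not necessarily correct. A mistranscribed name can sit inside a perfectly punctuated sentence, so a person still needs to read every cue against the audio.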

What about podcasts, or other audio that has no video, you ask? Great question. Yep, there is a solution for those too: transcripts.

Transcripts

Transcripts are

“a text version of the speech and non-speech audio information needed to understand the content” (“Transcripts in Making Audio and Video Media Accessible”)

Just like captions, many video and audio editing tools include the ability to generate transcripts, but the same issues and rules apply. They need to be double-checked for completeness and accuracy by a human. An awesome side effect of transcripts is that they help search results and SEO, because the content of that audio file can now be indexed and searched. Transcripts can be added to video content as well.
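To see why text makes audio findable, here is a toy sketch of indexing and searching transcripts. Real search engines are far more sophisticated; the episode titles, transcript text, and function names below are all invented for illustration.

```python
import re
from collections import defaultdict


def build_index(transcripts):
    """Map each lowercase word to the set of titles whose transcript contains it."""
    index = defaultdict(set)
    for title, text in transcripts.items():
        for word in re.findall(r"[a-z0-9']+", text.lower()):
            index[word].add(title)
    return index


def search(index, query):
    """Return the titles whose transcripts contain every word in the query."""
    words = re.findall(r"[a-z0-9']+", query.lower())
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results


# Hypothetical podcast episodes with their transcripts.
transcripts = {
    "Episode 1": "Today we talk about the first screen reader.",
    "Episode 2": "Captions and transcripts make audio searchable.",
}
index = build_index(transcripts)
print(search(index, "screen reader"))  # {'Episode 1'}
```

Without a transcript, there are no words to index, so none of what was said in the audio can turn up in a search.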

PS: No donkeys were harmed in the making of this blog post, official or otherwise.